Abstract

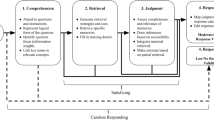

Increasingly, colleges and universities use survey results to make decisions, inform research, and shape public opinion. Given the large number of surveys distributed on campuses, can researchers reasonably expect that busy respondents will diligently answer each and every question? Less serious respondents may “satisfice,” i.e., take shortcuts to conserve effort, in a number of ways: choosing the same response every time, skipping items, rushing through the instrument, or quitting early. In this paper we apply this satisficing framework to demonstrate analytic options for assessing respondents’ conscientiousness in giving high-fidelity survey answers. Specifically, we operationalize satisficing as a series of measurable behaviors and compute a satisficing index for each survey respondent. Using data from two surveys administered in university contexts, we find that the majority of respondents engaged in satisficing behaviors, that single-item results can be significantly impacted by satisficing, and that scale reliabilities and correlations can be altered by satisficing behaviors. We conclude with a discussion of the importance of identifying satisficers in routine survey analysis in order to verify data quality prior to using results for decision-making, research, or public dissemination of findings.
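The abstract names four satisficing behaviors but does not say here how each is scored or how they are combined. The Python sketch below shows one way a per-respondent index of this kind could be built. The function name `satisficing_index`, the metric definitions, the equal weighting, and the 300-second speeding cut-off are all hypothetical assumptions for illustration, not the authors' procedure.

```python
from typing import Optional, Sequence


def satisficing_index(
    answers: Sequence[Optional[int]],
    duration_seconds: float,
    speed_threshold_seconds: float = 300.0,  # hypothetical speeding cut-off
) -> float:
    """Sum of four illustrative satisficing metrics, each scaled to [0, 1].

    `answers` holds one entry per item in presentation order; None marks an
    item the respondent did not answer. Higher totals mean more satisficing.
    """
    n = len(answers)
    if n == 0:
        raise ValueError("the instrument must contain at least one item")
    answered = [i for i, a in enumerate(answers) if a is not None]
    last = answered[-1] if answered else -1

    # Quitting early: share of items that come after the last answered item.
    quitting = (n - 1 - last) / n
    # Skipping: share of unanswered items among those the respondent reached.
    reached = last + 1
    skipping = (reached - len(answered)) / reached if reached else 0.0
    # Non-differentiation: 1 if two or more answers are all identical.
    values = [answers[i] for i in answered]
    nondiff = 1.0 if len(values) >= 2 and len(set(values)) == 1 else 0.0
    # Speeding: 1 if total completion time is below the threshold.
    speeding = 1.0 if duration_seconds < speed_threshold_seconds else 0.0

    return quitting + skipping + nondiff + speeding
```

For example, a respondent who answers `[3, 3, 3, 3]` in ten minutes scores 1.0 (straightlining only). Equal weighting is simply the most transparent choice; standardizing or weighting the metrics before summing would be equally defensible assumptions.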

Notes

Due to the dangers of artificially dividing continuous data (Maxwell and Delaney 1993), many of our analyses focus on comparing non-satisficers and very strong satisficers. We do, however, present results across all four quartiles.
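Grouping respondents into quartiles of the satisficing index can be sketched with the standard library as below. The labels are assumptions: the text names only non-satisficers and very strong satisficers, so mapping them to the lowest and highest quartiles, and the generic names for the middle two, are hypothetical.

```python
import bisect
import statistics
from typing import List, Sequence

# Hypothetical labels; only the two extreme groups are named in the text.
QUARTILE_LABELS = ["Q1 (non-satisficers)", "Q2", "Q3", "Q4 (very strong satisficers)"]


def quartile_groups(index_scores: Sequence[float]) -> List[str]:
    """Assign each satisficing-index score to a quartile group."""
    # Three cut points splitting the observed scores into four groups.
    cuts = statistics.quantiles(index_scores, n=4, method="inclusive")
    # bisect_left keeps scores equal to a cut point in the lower group,
    # so tied zeros (common with a positively skewed index) land in Q1.
    return [QUARTILE_LABELS[bisect.bisect_left(cuts, s)] for s in index_scores]
```

With a positively skewed index and many tied zeros, the cut points can coincide and some groups may end up unequal in size or even empty, which is one more reason to interpret the extreme groups with care.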

Here we use Feldt’s (1969) W statistic to test the significance of differences in coefficient alpha. In evaluating the impact of satisficing on scale reliabilities (RQ 3) and scale correlations (RQ 4), we conduct statistical tests only for the differences between non-satisficers and very strong satisficers. This decision is motivated by the pronounced differences between these two groups, the positive skew of the satisficing metrics and index, and a desire to avoid the accumulation of Type I error that would result from testing every pairwise difference.
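For two alphas estimated in independent groups, the commonly cited form of Feldt's test uses W = (1 − α₁)/(1 − α₂), which under the null hypothesis of equal population alphas is approximately F-distributed with (N₁ − 1, N₂ − 1) degrees of freedom. The sketch below computes W and its degrees of freedom; the names `feldt_w` and `FeldtW` are illustrative, and the formula should be checked against Feldt (1969) before use.

```python
from dataclasses import dataclass


@dataclass
class FeldtW:
    w: float   # test statistic
    df1: int   # numerator degrees of freedom
    df2: int   # denominator degrees of freedom


def feldt_w(alpha1: float, n1: int, alpha2: float, n2: int) -> FeldtW:
    """W statistic comparing coefficient alpha across two independent groups.

    Under H0 (equal population alphas), W = (1 - alpha1) / (1 - alpha2)
    is approximately F-distributed with (n1 - 1, n2 - 1) degrees of freedom.
    """
    if alpha1 >= 1 or alpha2 >= 1:
        raise ValueError("alpha must be below 1")
    if n1 < 2 or n2 < 2:
        raise ValueError("each group needs at least two respondents")
    return FeldtW((1 - alpha1) / (1 - alpha2), n1 - 1, n2 - 1)
```

A p-value then comes from the F distribution, for example `scipy.stats.f.sf(r.w, r.df1, r.df2)` for the upper tail, doubled from the smaller tail for a two-sided test.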

Here we did not consider the higher-level thinking and satisfaction scales of Survey 1, because we judged them too short to justify computing a non-differentiation metric.
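The note does not state the metric or the minimum scale length used. As one plausible operationalization, the sketch below scores non-differentiation on a single scale as the within-respondent standard deviation of the answered items (lower values mean less differentiation) and declines to score scales shorter than a threshold. Both the function `nondifferentiation` and the five-item minimum are hypothetical.

```python
import statistics
from typing import Optional, Sequence

MIN_ITEMS = 5  # hypothetical minimum scale length


def nondifferentiation(
    scale_answers: Sequence[Optional[int]], min_items: int = MIN_ITEMS
) -> Optional[float]:
    """Within-respondent SD across a scale's items, or None if not computable.

    A value of 0.0 means every answered item received the same response.
    """
    if len(scale_answers) < min_items:
        return None  # scale too short for a meaningful metric
    values = [a for a in scale_answers if a is not None]
    if len(values) < 2:
        return None  # too few answers to measure spread
    return statistics.pstdev(values)
```

On a short scale, identical answers are fairly likely even from an attentive respondent, which is why a length threshold of some kind seems sensible.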

This holds as long as not every respondent non-differentiates on the same response anchor (e.g., all respondents in the sample choosing 4 on a 4-point scale). In that case the scale variance would be zero, and Cronbach’s alpha could not be computed. However, if all respondents non-differentiate and even one of them chooses a different response anchor (e.g., 3 on a 4-point scale), alpha will be exactly 1.0.
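Both cases can be checked numerically. The sketch below computes alpha from its standard formula, α = k/(k − 1) · (1 − Σ item variances / total-score variance), for a complete respondents-by-items matrix; the function name and toy data are illustrative.

```python
import statistics
from typing import Sequence


def cronbach_alpha(responses: Sequence[Sequence[float]]) -> float:
    """Cronbach's alpha for a respondents-by-items matrix with no missing data."""
    k = len(responses[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    items = list(zip(*responses))  # transpose: one tuple per item
    item_var_sum = sum(statistics.pvariance(col) for col in items)
    total_var = statistics.pvariance([sum(row) for row in responses])
    if total_var == 0:
        raise ValueError("total-score variance is zero; alpha is undefined")
    return k / (k - 1) * (1 - item_var_sum / total_var)


# Everyone straightlines on 4: variance is zero and alpha cannot be computed.
all_fours = [[4, 4, 4, 4], [4, 4, 4, 4], [4, 4, 4, 4]]
# Everyone straightlines, but one respondent uses 3: alpha is 1.0.
one_three = [[4, 4, 4, 4], [4, 4, 4, 4], [3, 3, 3, 3]]
```

When every respondent gives the same answer to all k items, each total score is k times that answer, so the total-score variance is k² times the common item variance while the summed item variances are only k times it; the formula then reduces to k/(k − 1) · (1 − 1/k) = 1.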

References

Dillman, D. A., Smyth, J. D., & Christian, L. M. (2009). Internet, mail, and mixed-mode surveys: The tailored design method (3rd ed.). Hoboken, NJ: Wiley.

Feldt, L. S. (1969). A test of the hypothesis that Cronbach's alpha or Kuder-Richardson coefficient twenty is the same for two tests. Psychometrika, 34, 363–373.

Gonyea, R. M., & Kuh, G. D. (Eds.). (2009). Using NSSE in institutional research [Special issue]. New Directions for Institutional Research, 141. San Francisco, CA: Jossey-Bass.

Krosnick, J. A. (1991). Cognitive demands of attitude measures. Applied Cognitive Psychology, 5, 213–236.

Laster, J. (2010, April 30). Black students experience more online bias than do whites. The Chronicle of Higher Education. Retrieved March 15, 2011, from http://chronicle.com/blogs/wiredcampus/black-students-experience-more-online-bias-than-do-whites/23616.

Marchand, A. (2010, January 21). Cost of college is a big worry of freshmen in national survey. The Chronicle of Higher Education. Retrieved March 15, 2011, from http://chronicle.com/article/Cost-of-College-Is-a-Big-Worry/63671/.

Maxwell, S. E., & Delaney, H. D. (1993). Bivariate median splits and spurious statistical significance. Psychological Bulletin, 113(1), 181–190.

Miller, M. H. (2010, March 16). Students use Wikipedia early and often. The Chronicle of Higher Education. Retrieved March 15, 2011, from http://chronicle.com/blogs/wiredcampus/students-use-wikipedia-earlyoften/21850.

Porter, S. R. (2011). Do college student surveys have any validity? The Review of Higher Education, 35(1), 45–76.

Simon, H. A. (1957). Models of man. New York: Wiley.

Simon, H. A., & Stedry, A. C. (1968). Psychology and economics. In G. Lindzey & E. Aronson (Eds.), Handbook of social psychology (2nd ed., Vol. 5, pp. 269–314). Reading, MA: Addison-Wesley.

Snover, L., Terkla, D. G., Kim, H., Decarie, L., & Brittingham, B. (2010, June). NEASC Commission on Higher Education requirements for documentation of assessment of student learning and student success in the accreditation process: Four case studies. In J. Carpenter-Hubin (Chair), Charting our future in higher education. 50th Annual Forum of the Association for Institutional Research (AIR), Chicago, IL.

Tourangeau, R. (1984). Cognitive sciences and survey methods. In T. Jabine, M. Straf, J. Tanur, & R. Tourangeau (Eds.), Cognitive aspects of survey methodology: Building a bridge between disciplines (pp. 73–100). Washington, DC: National Academy Press.

Tourangeau, R., Rips, L. J., & Rasinski, K. A. (2000). The psychology of survey response. New York: Cambridge University Press.

Cite this article

Barge, S., Gehlbach, H. Using the Theory of Satisficing to Evaluate the Quality of Survey Data. Res High Educ 53, 182–200 (2012). https://doi.org/10.1007/s11162-011-9251-2

DOI: https://doi.org/10.1007/s11162-011-9251-2