Abstract

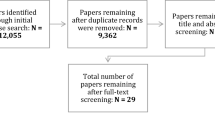

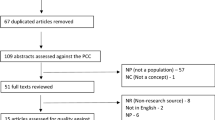

Ongoing transformations in health professions education underscore the need for valid and reliable assessment. The current standard for assessment validation requires evidence from five sources: content, response process, internal structure, relations with other variables, and consequences. However, researchers remain uncertain about which types of data contribute to each evidence source. We sought to enumerate the validity evidence sources and supporting data elements for assessments using technology-enhanced simulation. We systematically searched databases including MEDLINE, ERIC, and Scopus through May 2011. We included original research that evaluated the validity of simulation-based assessment scores using two or more evidence sources. Working in duplicate, we abstracted information on the prevalence of each evidence source and its underlying data elements. Among 217 eligible studies, only six (3 %) referenced the five-source framework, and 51 (24 %) made no reference to any validity framework. The most common evidence sources and data elements were relations with other variables (94 % of studies; reported most often as variation in simulator scores across training levels), internal structure (76 %; supported by reliability data or item analysis), and content (63 %; reported as expert panels or modification of existing instruments). Evidence of response process and evidence of consequences each appeared in <10 % of studies. We conclude that relations with training level appear to be overrepresented in this field, while evidence of consequences and of response process is infrequently reported. Validation science will improve as educators use established frameworks to collect and interpret evidence from the full spectrum of possible sources and elements.

Acknowledgments

The authors thank Jason Szostek, MD, Amy Wang, MD, and Patricia Erwin, MLS, for their efforts in article identification. This work was supported by an award from the Division of General Internal Medicine, Mayo Clinic.

Conflict of interest

None.

Human subjects

No human subjects were involved.

Electronic supplementary material

Electronic supplementary material is available with the online version of this article.

About this article

Cite this article

Cook, D.A., Zendejas, B., Hamstra, S.J. et al. What counts as validity evidence? Examples and prevalence in a systematic review of simulation-based assessment. Adv in Health Sci Educ 19, 233–250 (2014). https://doi.org/10.1007/s10459-013-9458-4

DOI: https://doi.org/10.1007/s10459-013-9458-4