Abstract

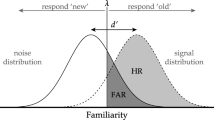

Previously we have proposed a signal detection theory (SDT) methodology for measuring metacognitive sensitivity (Maniscalco and Lau, Conscious Cogn 21:422–430, 2012). Our SDT measure, meta-d′, provides a response-bias-free measure of how well confidence ratings track task accuracy. Here we provide an overview of standard SDT and an extended formal treatment of meta-d′. However, whereas meta-d′ characterizes an observer’s sensitivity in tracking overall accuracy, it may sometimes be of interest to assess metacognition for a particular kind of behavioral response. For instance, in a perceptual detection task, we may wish to characterize metacognition separately for reports of stimulus presence and absence. Here we discuss the methodology for computing such a “response-specific” meta-d′ and provide corresponding Matlab code. This approach potentially offers an alternative explanation for data that are typically taken to support the unequal variance SDT (UV-SDT) model. We demonstrate that simulated data generated from UV-SDT can be well fit by an equal variance SDT model positing different metacognitive ability for each kind of behavioral response, and likewise that data generated by the latter model can be captured by UV-SDT. This ambiguity entails that caution is needed in interpreting the processes underlying relative operating characteristic (ROC) curve properties. Type 1 ROC curves generated by combining type 1 and type 2 judgments, traditionally interpreted in terms of low-level processes (UV), can potentially be interpreted in terms of high-level processes instead (response-specific metacognition). Similarly, differences in area under response-specific type 2 ROC curves may reflect the influence of low-level processes (UV) rather than high-level metacognitive processes.

Notes

- 1.

We have previously used the phrase “response-conditional” rather than “response-specific” [13]. However, [2] used the terms “stimulus-conditional” and “response-conditional” to refer to the type 1 and type 2 tasks. Thus, to avoid confusion, we now use “response-specific” to refer to type 2 performance for a given response type. We will use the analogous phrase “stimulus-specific” to refer to type 2 performance for correct and incorrect trials corresponding to a particular stimulus.

- 2.

Traditionally, S1 is taken to be the “signal absent” stimulus and S2 the “signal present” stimulus. Here we follow [12] in using the more neutral terms S1 and S2 for the sake of generality.

- 3.

We will adopt the convention of placing “S1” and “S2” in quotation marks whenever they denote an observer’s classification of a stimulus, and omitting quotation marks when these denote the objective stimulus identity.

- 4.

These category names are more intuitive when thinking of S1 and S2 as “signal absent” and “signal present.” Then a hit is a successful detection of the signal, a miss is a failure to detect the signal, a correct rejection is an accurate assessment that no signal was presented, and a false alarm is a detection of a signal where none existed.

- 5.

Since hit rate and miss rate sum to 1, miss rate does not provide any extra information beyond that provided by hit rate and can be ignored; similarly for false alarm rate and correct rejection rate.
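To make notes 4 and 5 concrete, here is a minimal Python sketch (not the Matlab code accompanying the chapter) that tallies the four type 1 outcome categories from a list of (stimulus, response) pairs. The function name `type1_rates` and the trial counts are hypothetical, chosen only for illustration.

```python
# Illustrative sketch; the function name and data are hypothetical.
from collections import Counter

def type1_rates(trials):
    """Return (hit rate, miss rate, false alarm rate, correct rejection rate).

    trials: iterable of (stimulus, response) pairs, each "S1" or "S2",
    with S2 playing the role of "signal present".
    """
    counts = Counter(trials)
    n_s2 = counts[("S2", "S2")] + counts[("S2", "S1")]  # number of S2 trials
    n_s1 = counts[("S1", "S2")] + counts[("S1", "S1")]  # number of S1 trials
    hr = counts[("S2", "S2")] / n_s2   # hit: S2 stimulus classified "S2"
    mr = counts[("S2", "S1")] / n_s2   # miss: S2 stimulus classified "S1"
    far = counts[("S1", "S2")] / n_s1  # false alarm: S1 stimulus classified "S2"
    crr = counts[("S1", "S1")] / n_s1  # correct rejection: S1 classified "S1"
    return hr, mr, far, crr

# Hypothetical data: 10 S2 trials and 10 S1 trials.
trials = ([("S2", "S2")] * 8 + [("S2", "S1")] * 2
          + [("S1", "S2")] * 3 + [("S1", "S1")] * 7)
hr, mr, far, crr = type1_rates(trials)
print(hr, mr, far, crr)  # 0.8 0.2 0.3 0.7
```

Because hr + mr and far + crr are both 1, reporting hr and far alone loses no information, which is the point of note 5.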

- 6.

Note that the example ROC curve in Fig. 3.1b is depicted as having been constructed from confidence data (Fig. 3.1a), rather than from direct experimental manipulations of the observer’s criterion for responding “S2”. See the section below titled “Constructing pseudo type 1 ROC curves from type 2 data.”
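The construction mentioned in note 6 can be sketched as follows. As an assumption made for illustration, each stimulus’s trials are tallied over 2K response categories ordered from “S1” at the highest confidence K to “S2” at the highest confidence K, and every boundary between adjacent categories is treated as a criterion for responding “S2”. The function name and counts are hypothetical.

```python
# Illustrative sketch; the function name, category ordering and counts are assumptions.

def pseudo_type1_roc(counts_s1, counts_s2):
    """Pseudo type 1 ROC points from confidence-rating counts.

    counts_s1[j], counts_s2[j]: trials with stimulus S1 / S2 in response
    category j, ordered ("S1", conf=K), ..., ("S1", conf=1),
    ("S2", conf=1), ..., ("S2", conf=K).
    Returns (FAR, HR) pairs from the most conservative to the most liberal
    criterion for responding "S2".
    """
    n1, n2 = sum(counts_s1), sum(counts_s2)
    points = []
    for j in range(len(counts_s1) - 1, 0, -1):
        # Categories j and above are counted as "S2" responses.
        far = sum(counts_s1[j:]) / n1
        hr = sum(counts_s2[j:]) / n2
        points.append((far, hr))
    return points

# Hypothetical counts with K = 2 confidence levels (4 categories per stimulus).
counts_s1 = [4, 3, 2, 1]
counts_s2 = [1, 2, 3, 4]
print(pseudo_type1_roc(counts_s1, counts_s2))
# [(0.1, 0.4), (0.3, 0.7), (0.6, 0.9)]
```

Only the middle point, (0.3, 0.7) here, reflects the type 1 response alone; the other points also depend on the confidence ratings, which is the concern raised in note 13.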

- 7.

In principle, since the observer should always choose the stimulus classification deemed most likely to be correct, in a two-alternative task he should always judge the chosen response to be more likely correct than incorrect. Intuitively, then, the type 2 decision actually consists in deciding whether the type 1 response is likely to be correct or not, where the standard for what level of confidence merits the label “likely to be correct” is set by a subjective criterion that can be either conservative or liberal. Nonetheless, viewing the type 2 task as a discrimination between correct and incorrect stimulus classifications facilitates comparison with the type 1 task.

- 8.

The analogy is more intuitive when thinking of S1 as “signal absent” and S2 as “signal present”. Then the type 2 analogue of “signal absent” is an incorrect stimulus classification, whereas the analogue of “signal present” is a correct stimulus classification. The type 2 task can then be thought of as involving the detection of this type 2 “signal.”
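Notes 7 and 8 treat the type 2 task as detecting a correct classification (the type 2 “signal”) among incorrect ones. Under that reading, a minimal sketch of the resulting type 2 (FAR, HR) pairs follows, assuming ratings on a 1 to K scale where “high confidence” for criterion k means a rating of at least k. The function name and data are hypothetical.

```python
# Illustrative sketch; the function name and data are hypothetical.

def type2_roc(correct, conf, n_levels):
    """Type 2 ROC points: one (type 2 FAR, type 2 HR) pair per criterion k.

    correct: sequence of bools (was the type 1 response correct?)
    conf: sequence of confidence ratings in 1..n_levels
    A trial counts as "high confidence" for criterion k if its rating is >= k.
    """
    n_correct = sum(correct)
    n_incorrect = len(correct) - n_correct
    points = []
    for k in range(n_levels, 1, -1):  # strictest criterion first
        hits2 = sum(1 for ok, y in zip(correct, conf) if ok and y >= k)
        fas2 = sum(1 for ok, y in zip(correct, conf) if not ok and y >= k)
        points.append((fas2 / n_incorrect, hits2 / n_correct))
    return points

# Hypothetical data: 8 correct and 4 incorrect trials on a 4-point scale.
correct = [True] * 8 + [False] * 4
conf = [4, 4, 3, 3, 3, 2, 2, 1] + [3, 2, 1, 1]
print(type2_roc(correct, conf, 4))
# [(0.0, 0.25), (0.25, 0.625), (0.5, 0.875)]
```

Correct trials play the role that S2 trials play in the type 1 task, so the type 2 hit rate is p(high conf | correct) and the type 2 false alarm rate is p(high conf | incorrect).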

- 9.

It is also possible to conduct a stimulus-specific analysis and construct stimulus-specific type 2 ROC curves. For S1 stimuli, this would consist in a plot of p(high conf|correct rejection) vs p(high conf|false alarm). Likewise for S2 stimuli—p(high conf|hit) vs p(high conf|miss). However, as will be made clear later in the text, the present approach to analyzing type 2 ROC curves in terms of the type 1 SDT model requires each type 2 (FAR, HR) pair to be generated by the application of a type 2 criterion to two overlapping distributions. For stimulus-specific type 2 data, the corresponding type 1 model consists of only one stimulus distribution, with separate type 2 criteria for “S1” and “S2” responses generating the type 2 FAR and type 2 HR. (e.g. for the S2 stimulus, a type 2 criterion for “S1” responses rates confidence for type 1 misses, and a separate type 2 criterion for “S2” responses rates confidence for type 1 hits.) Thus there is no analogue of meta-d′ for stimulus-specific type 2 data, since d′ is only defined with respect to the relationship between two stimulus distributions, whereas stimulus-specific analysis is restricted to only one stimulus distribution. It is possible that an analysis of stimulus-specific type 2 ROC curves could be conducted by positing how the type 2 criteria on either side of the type 1 criterion are coordinated, or similarly by supposing that the observer rates confidence according to an overall type 2 decision variable. For more elaboration, see the section below titled “Comparison of the current approach to that of [9].”
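The difference between the response-specific rates used in the chapter and the stimulus-specific rates described in note 9 is easiest to see side by side. The sketch below, with hypothetical function names and a toy data set, treats a rating of at least k as high confidence. A response-specific pair takes its correct and incorrect trials from different stimuli (two distributions); a stimulus-specific pair takes both from the same stimulus (one distribution).

```python
# Illustrative sketch; function names and data are hypothetical.

def p_high(trials, stim, resp, k):
    """p(conf >= k | stimulus = stim, response = resp)."""
    confs = [y for s, r, y in trials if s == stim and r == resp]
    return sum(y >= k for y in confs) / len(confs)

def other(label):
    return "S1" if label == "S2" else "S2"

def response_specific(trials, resp, k):
    """(type 2 FAR, type 2 HR) for one response type: incorrect trials have
    the other stimulus, correct trials have stimulus == resp."""
    return p_high(trials, other(resp), resp, k), p_high(trials, resp, resp, k)

def stimulus_specific(trials, stim, k):
    """(type 2 FAR, type 2 HR) for one stimulus: incorrect trials have the
    other response, correct trials have response == stim."""
    return p_high(trials, stim, other(stim), k), p_high(trials, stim, stim, k)

# Hypothetical (stimulus, response, confidence) trials on a 2-point scale.
trials = ([("S2", "S2", y) for y in [2, 2, 2, 1]]        # hits
          + [("S2", "S1", y) for y in [2, 1]]            # misses
          + [("S1", "S2", y) for y in [1, 1, 2, 1]]      # false alarms
          + [("S1", "S1", y) for y in [2, 2, 2, 2, 1]])  # correct rejections

print(response_specific(trials, "S2", 2))  # (0.25, 0.75): false alarm vs hit
print(response_specific(trials, "S1", 2))  # (0.5, 0.8): miss vs correct rejection
print(stimulus_specific(trials, "S2", 2))  # (0.5, 0.75): miss vs hit
print(stimulus_specific(trials, "S1", 2))  # (0.25, 0.8): false alarm vs correct rejection
```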

- 10.

See “Comparison of the current approach to that of Galvin et al. [9]” and footnote 12 for a more detailed consideration of the type 2 decision axis.

- 11.

Provided the assumptions of the SDT model are correct.

- 12.

Of course, our approach must at least implicitly assume a type 2 decision variable within each response type. In our treatment, the implicit type 2 decision variable for each response type is just the distance of x from c. However, for the analysis of response-specific type 2 performance for the equal variance SDT model, distance from criterion and type 2 likelihood ratio are equivalent decision variables. This is because they vary monotonically with each other [9], and so produce the same type 2 ROC curve [5, 21].
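The claim in note 12 that, under equal variance SDT, distance from criterion and the type 2 likelihood ratio are equivalent decision variables can be checked numerically. The sketch assumes S1 ~ N(0, 1), S2 ~ N(d′, 1) and a type 1 criterion located at c on the x axis, and evaluates the type 2 likelihood ratio for “S2” responses, p(x | correct, “S2”) / p(x | incorrect, “S2”), at increasing distances x − c. It uses Python’s standard `statistics.NormalDist`; the function name and parameter values are hypothetical.

```python
# Illustrative sketch; the function name and parameter values are hypothetical.
from statistics import NormalDist

def type2_lr_s2(x, d_prime, c):
    """Type 2 likelihood ratio for an "S2" response at internal value x > c.

    Assumes equal variance SDT: S1 ~ N(0, 1), S2 ~ N(d_prime, 1), criterion at c.
    Correct "S2" responses come from S2, incorrect ones from S1; each density
    is renormalized to the region x > c in which "S2" is the response.
    """
    s1, s2 = NormalDist(0, 1), NormalDist(d_prime, 1)
    p_x_correct = s2.pdf(x) / (1 - s2.cdf(c))
    p_x_incorrect = s1.pdf(x) / (1 - s1.cdf(c))
    return p_x_correct / p_x_incorrect

d_prime, c = 1.5, 0.6
xs = [c + 0.25 * i for i in range(1, 13)]  # increasing distance from c
lrs = [type2_lr_s2(x, d_prime, c) for x in xs]
print(all(a < b for a, b in zip(lrs, lrs[1:])))  # True: the ratio rises with x - c
```

Analytically, the ratio equals exp(d′(x − d′/2)) times a constant that depends only on c and d′, so it increases strictly with x whenever d′ > 0; the mirror-image argument applies to “S1” responses, where the ratio rises with c − x.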

- 13.

When the multiple points on the type 1 ROC curve are obtained using confidence rating data, it is arguably preferable to calculate d′ and c only from the (FAR, HR) pair generated purely by the observer’s type 1 response. The remaining type 1 ROC points incorporate confidence rating data and depend on type 2 sensitivity, and so estimating d′ on the basis of these ROC points may confound type 1 and type 2 sensitivity. See the section below titled “Response-specific meta-d′ and the unequal variance SDT model”.
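For reference, here is a minimal sketch of the standard equal variance formulas for d′ and c from a single (FAR, HR) pair (as in, e.g., [12]): d′ = z(HR) − z(FAR) and c = −[z(HR) + z(FAR)]/2, where z is the inverse of the standard normal CDF. Following note 13, the pair passed in should be the one produced by the type 1 response alone. Here c is measured relative to the midpoint between the two distributions, one common parameterization that may differ from the one used in the main text; the function name is hypothetical.

```python
# Illustrative sketch; the function name is hypothetical.
from statistics import NormalDist

def dprime_and_c(hr, far):
    """Equal variance SDT estimates of d' and c from one (FAR, HR) pair.

    c is measured relative to the midpoint between the S1 and S2 means, so
    c = 0 means no bias and c > 0 means a bias toward responding "S1".
    Rates of exactly 0 or 1 give infinite z-scores and would need a
    correction before calling this (not handled here).
    """
    z = NormalDist().inv_cdf  # inverse standard normal CDF
    d_prime = z(hr) - z(far)
    c = -0.5 * (z(hr) + z(far))
    return d_prime, c

d_prime, c = dprime_and_c(hr=0.8, far=0.1)
print(round(d_prime, 3), round(c, 3))  # 2.123 0.22
```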

- 14.

Note that for this model, x and \( x_2 \) can fall on opposite sides of the type 1 decision criterion c (see, e.g., Fig. 3.5a, b). This is not problematic, since only x is used to provide the type 1 stimulus classification. It is also possible for \( x_2 \) to surpass some of the type 2 criteria on the opposite side of c. For instance, suppose that \( x = -0.5 \), \( x_2 = +0.6 \), \( c = 0 \), and \( c_{2,\text{``S2''}}^{\text{conf}=h} = +0.5 \). Then x is classified as “S1”, and yet \( x_2 \) surpasses the criterion for rating “S2” responses with a confidence of h. This creates the potential for a paradoxical result in which the type 1 response is “S1” and yet confidence is rated highly because of the relatively strong “S2”-ness of \( x_2 \). In this example, the paradox is resolved by the type 2 decision rules stated above, which stipulate that internal responses are evaluated only with respect to the response-specific type 2 criteria that are congruent with the type 1 response. The decision rule therefore never compares \( x_2 \) with the type 2 criteria for “S2” responses. Instead, it finds that \( x_2 \) does not surpass the minimal confidence criterion for “S1” responses (i.e., \( x_2 > c > c_{2,\text{``S1''}}^{\text{conf}=2} \)) and therefore assigns a confidence rating of 1. The paradoxical outcome is thus averted here, but such potentially paradoxical results need to be taken into account for any SDT model that posits a dissociation between x and \( x_2 \).
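The decision rule invoked in note 14 can be sketched in a few lines. The sketch assumes one type 2 decision variable `x2` per trial, confidence levels 1 to K, and ordered lists of response-specific criteria for confidence levels 2 to K. The function name and the “S1” criterion value of −0.5 are hypothetical; the remaining values reproduce the example in the note, with K = 2 so that h = 2.

```python
# Illustrative sketch; the function name and the "S1" criterion are hypothetical.

def respond(x, x2, c, crit_s1, crit_s2):
    """Type 1 response from x; confidence from x2, compared only with the
    type 2 criteria that belong to the type 1 response actually given.

    crit_s2: criteria for "S2" confidence levels 2..K (increasing, all > c).
    crit_s1: criteria for "S1" confidence levels 2..K (decreasing, all < c).
    Ties are resolved arbitrarily here (x == c gives "S1").
    """
    if x > c:
        resp = "S2"
        # With ordered criteria, counting those reached gives the highest level.
        conf = 1 + sum(x2 >= k for k in crit_s2)
    else:
        resp = "S1"
        conf = 1 + sum(x2 <= k for k in crit_s1)
    return resp, conf

print(respond(-0.5, 0.6, 0.0, crit_s1=[-0.5], crit_s2=[0.5]))  # ('S1', 1)
print(respond(0.7, 0.6, 0.0, crit_s1=[-0.5], crit_s2=[0.5]))   # ('S2', 2)
```

In the first call, a rule that ignored the type 1 response would have compared `x2` = 0.6 with the “S2” criterion of +0.5 and returned high confidence; restricting the comparison to the “S1” criteria yields a confidence of 1, as the note describes.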

- 15.

Note that the values for d′ and c recovered by EV-SDT analysis are slightly different from those used in the generating UV-SDT model due to their differing assumptions about the distribution variances.

- 16.

The value of meta-d′ “S2” at 2.4 was substantially larger than the value of d′ at 1.7, an unusual result as we would typically expect meta-d′ ≤ d′ [13]. However, constraining the RSM-SDT fit such that meta-d′ “S2” ≤ d′ still produced data that gave a reasonable approximation to the z-ROC curve. Fitting the UV-SDT model to the data distributed according to this RSM-SDT fit yielded s = 0.83, demonstrating that even with the constraint that meta-d′ “S2” ≤ d′, RSM-SDT still produced a z-ROC curve with non-unit slope.
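Note 16 relies on the link between UV-SDT and the slope of the z-ROC curve. As a worked check, assume S1 ~ N(0, 1) and S2 ~ N(μ, σ). For any criterion k, z(FAR) = −k and z(HR) = (μ − k)/σ, so the z-ROC is a straight line with slope 1/σ, which equals s = σ_S1/σ_S2 under this assumed convention. The sketch generates exact z-ROC points for a few hypothetical criteria and recovers the slope by least squares; the parameter values are illustrative, not those of the chapter’s simulations.

```python
# Illustrative sketch; parameter values and function names are hypothetical.
from statistics import NormalDist

def zroc_points(mu, sigma, criteria):
    """(z(FAR), z(HR)) for S1 ~ N(0, 1) and S2 ~ N(mu, sigma) at each criterion."""
    s1, s2 = NormalDist(0, 1), NormalDist(mu, sigma)
    z = NormalDist().inv_cdf
    return [(z(1 - s1.cdf(k)), z(1 - s2.cdf(k))) for k in criteria]

def ols_slope(points):
    """Ordinary least squares slope of y on x."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    num = sum((x - mx) * (y - my) for x, y in points)
    den = sum((x - mx) ** 2 for x in xs)
    return num / den

points = zroc_points(mu=1.5, sigma=1.25, criteria=[-0.5, 0.0, 0.5, 1.0, 1.5])
print(round(ols_slope(points), 3))  # 0.8, i.e. s = 1 / 1.25
```

With σ = 1 (equal variance) the same code returns a slope of 1, so a fitted s that departs from 1, such as the 0.83 reported in the note, indicates a non-unit z-ROC slope.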

- 17.

Note that the nature of the UV-SDT model inherently places constraints upon the set of type 1 and type 2 ROC curves that can be exhibited at the same time, whereas the method for fitting meta-d′ minimizes constraints of type 1 performance upon the type 2 fit. Additionally, the likelihood function for the UV-SDT model is built from pseudo type 1 probabilities of the form p(resp = r, conf = y|stim = s). This is different from the likelihood function for fitting meta-d′, which is built from type 2 probabilities of the form p(conf = y|stim = s, resp = r). Thus, whereas the meta-d′ algorithm is specialized for fitting type 2 data, the fit for the UV-SDT model must account for variance in both type 1 and type 2 responses, entailing potential tradeoffs in the fit. Fitting UV-SDT to the data with a type 2 likelihood function achieves a near perfect fit to the type 2 ROC curves, albeit with a very poor fit to the type 1 ROC curve (data not shown).
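The distinction in note 17 between the two likelihood functions can be made concrete. Given model probabilities p(resp = r, conf = y | stim = s) and observed counts, the sketch computes the pseudo type 1 log-likelihood and the type 2 log-likelihood obtained by conditioning on the type 1 response, p(conf = y | stim = s, resp = r) = p(resp = r, conf = y | stim = s) / p(resp = r | stim = s). The two differ by a term that depends only on type 1 responses, so maximizing the type 2 likelihood ignores how well a model reproduces the type 1 response rates. The function name, probabilities and counts are hypothetical.

```python
# Illustrative sketch; the function name, probabilities and counts are hypothetical.
import math

def log_likelihoods(counts, probs):
    """Return (pseudo type 1 log-likelihood, type 2 log-likelihood).

    counts[(s, r, y)]: number of trials with stimulus s, response r, confidence y.
    probs[(s, r, y)]: model probability p(resp = r, conf = y | stim = s),
    summing to 1 over (r, y) for each stimulus s.
    """
    # p(resp = r | stim = s), obtained by summing over confidence levels.
    p_resp = {}
    for (s, r, y), p in probs.items():
        p_resp[(s, r)] = p_resp.get((s, r), 0.0) + p
    ll_type1 = sum(n * math.log(probs[key]) for key, n in counts.items() if n > 0)
    ll_type2 = sum(n * math.log(probs[(s, r, y)] / p_resp[(s, r)])
                   for (s, r, y), n in counts.items() if n > 0)
    return ll_type1, ll_type2

# Hypothetical model probabilities and counts with 2 confidence levels.
probs = {("S1", "S1", 2): 0.4, ("S1", "S1", 1): 0.3, ("S1", "S2", 1): 0.2, ("S1", "S2", 2): 0.1,
         ("S2", "S1", 2): 0.1, ("S2", "S1", 1): 0.2, ("S2", "S2", 1): 0.3, ("S2", "S2", 2): 0.4}
counts = {("S1", "S1", 2): 30, ("S1", "S1", 1): 25, ("S1", "S2", 1): 30, ("S1", "S2", 2): 15,
          ("S2", "S1", 2): 5, ("S2", "S1", 1): 20, ("S2", "S2", 1): 35, ("S2", "S2", 2): 40}
ll1, ll2 = log_likelihoods(counts, probs)

# The difference is a purely type 1 term: sum over (s, r) of n(s, r) * log p(r | s).
type1_term = (55 * math.log(0.7) + 45 * math.log(0.3)
              + 25 * math.log(0.3) + 75 * math.log(0.7))
print(abs((ll1 - ll2) - type1_term) < 1e-9)  # True
```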

References

[1] Balakrishnan JD (1998) Measures and interpretations of vigilance performance: evidence against the detection criterion. Hum Factors: J Hum Factors Ergon Soc 40(4):601–623. doi:10.1518/001872098779649337

[2] Clarke FR, Birdsall TG, Tanner J (1959) Two types of ROC curves and definitions of parameters. J Acoust Soc Am 31(5):629–630. doi:10.1121/1.1907764

[3] Clifford CWG, Arabzadeh E, Harris JA (2008) Getting technical about awareness. Trends Cogn Sci 12(2):54–58. doi:10.1016/j.tics.2007.11.009

[4] Dorfman DD, Alf E (1969) Maximum-likelihood estimation of parameters of signal-detection theory and determination of confidence intervals–Rating-method data. J Math Psychol 6(3):487–496. doi:10.1016/0022-2496(69)90019-4

[5] Egan JP (1975) Signal detection theory and ROC analysis. Academic Press, New York

[6] Evans S, Azzopardi P (2007) Evaluation of a “bias-free” measure of awareness. Spat Vis 20(1–2):61–77

[7] Fleming SM, Maniscalco B, Amendi N, Ro T, Lau H (in review) Action-specific disruption of visual metacognition

[8] Fleming SM, Weil RS, Nagy Z, Dolan RJ, Rees G (2010) Relating introspective accuracy to individual differences in brain structure. Science 329(5998):1541–1543. doi:10.1126/science.1191883

[9] Galvin SJ, Podd JV, Drga V, Whitmore J (2003) Type 2 tasks in the theory of signal detectability: discrimination between correct and incorrect decisions. Psychon Bull Rev 10(4):843–876. PMID:15000533

[10] Green DM, Swets JA (1966) Signal detection theory and psychophysics. Wiley, New York

[11] Kanai R, Walsh V, Tseng C-H (2010) Subjective discriminability of invisibility: a framework for distinguishing perceptual and attentional failures of awareness. Conscious Cogn. doi:10.1016/j.concog.2010.06.003

[12] Macmillan NA, Creelman CD (2005) Detection theory: a user’s guide, 2nd edn. Lawrence Erlbaum

[13] Maniscalco B, Lau H (2012) A signal detection theoretic approach for estimating metacognitive sensitivity from confidence ratings. Conscious Cogn 21(1):422–430. doi:10.1016/j.concog.2011.09.021

[14] Markowitz J, Swets JA (1967) Factors affecting the slope of empirical ROC curves: comparison of binary and rating responses. Percept Psychophys 2(3):91–100. doi:10.3758/BF03210301

[15] Mueller ST, Weidemann CT (2008) Decision noise: an explanation for observed violations of signal detection theory. Psychon Bull Rev 15(3):465–494. PMID:18567246

[16] Ogilvie JC, Creelman CD (1968) Maximum-likelihood estimation of receiver operating characteristic curve parameters. J Math Psychol 5(3):377–391. doi:10.1016/0022-2496(68)90083-7

[17] Rounis E, Maniscalco B, Rothwell JC, Passingham RE, Lau H (2010) Theta-burst transcranial magnetic stimulation to the prefrontal cortex impairs metacognitive visual awareness. Cogn Neurosci 1(3):165–175. doi:10.1080/17588921003632529

[18] Schulman AJ, Mitchell RR (1966) Operating characteristics from Yes-No and Forced-Choice procedures. J Acoust Soc Am 40(2):473–477. doi:10.1121/1.1910098

[19] Swets JA (1986) Indices of discrimination or diagnostic accuracy: their ROCs and implied models. Psychol Bull 99(1):100–117. PMID:3704032

[20] Swets JA (1986) Form of empirical ROCs in discrimination and diagnostic tasks: implications for theory and measurement of performance. Psychol Bull 99(2):181–198

[21] Swets JA, Tanner WP Jr, Birdsall TG (1961) Decision processes in perception. Psychol Rev 68(5):301–340. doi:10.1037/h0040547

[22] Tanner WP Jr, Swets JA (1954) A decision-making theory of visual detection. Psychol Rev 61(6):401–409

[23] Van Zandt T (2000) ROC curves and confidence judgements in recognition memory. J Exp Psychol Learn Mem Cogn 26(3):582–600

Acknowledgements

This work was supported by Templeton Foundation Grant 21569 (H.L.). We thank Dobromir Rahnev and Guillermo Solovey for comments on an earlier version of the manuscript.

Copyright information

© 2014 Springer-Verlag Berlin Heidelberg

About this chapter

Cite this chapter

Maniscalco, B., Lau, H. (2014). Signal Detection Theory Analysis of Type 1 and Type 2 Data: Meta-d′, Response-Specific Meta-d′, and the Unequal Variance SDT Model. In: Fleming, S., Frith, C. (eds) The Cognitive Neuroscience of Metacognition. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-642-45190-4_3

DOI: https://doi.org/10.1007/978-3-642-45190-4_3

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-642-45189-8

Online ISBN: 978-3-642-45190-4

eBook Packages: Biomedical and Life Sciences, Biomedical and Life Sciences (R0)