Abstract

Latent class analysis has been used in previous research to compare the configuration of citizenship norms endorsement among students in different countries. This study fits a different model specification, a homogenous model, in order to produce interpretable and comparable unobserved profiles of citizenship norms in different countries. This analysis was conducted using data from IEA’s International Civic and Citizenship Education Study (ICCS) 2016, which includes responses from students in 24 countries in Europe, Latin America, and Asia. The five-class citizenship norms profiles results and the trade-offs in model specifications are discussed in this chapter. The five-class solution presented here is comparable to previous studies assessing citizenship concepts in various settings.

You have full access to this open access chapter, Download chapter PDF

Similar content being viewed by others

Keywords

- Citizenship norms

- Duty-based citizenship

- Engaged citizenship

- Latent class analysis

- International Civic and Citizenship Education Study (ICCS)

1 Introduction

Large-scale international studies like the International Civic and Citizenship Education Study (ICCS) 2016, coordinated by the International Association for the Evaluation of Educational Achievement (IEA), collect responses from participants in different countries, and generate composite indicators using these responses. The availability of responses from participants across countries is advantageous for making comparisons between different contexts. However, a key problem for large-scale studies is to guarantee comparability across generated indicators. For comparisons across countries to be meaningful, the indicators used to capture the variability of an attribute of interest must present invariant properties. That is, studies assuming measurement equivalence between countries need to provide evidence of this equivalence. When comparisons across countries are the goal, it is necessary to provide evidence of the extent to which a certain indicator is comparable between the different groups. Once equivalence is supported by evidence, then claims regarding the difference (or similarities) between countries, or relationships between covariates (or lack thereof) are interpretable. Without equivalence, differences between countries may be due to unobserved sources of variance other than the attribute being studied, including translation differences in indicators (Byrne and Watkins 2003) and cultural differences involved in the response process (Nagengast and Marsh 2013), among other possible sources.

International large-scale studies rely on complex sample survey designs to support population inferences. This entails the presence of sampling weights and stratification factors, which are additional study design components that should not be ignored. Based on the framework presented in Chap. 2, we propose to address the issue of invariance, while including the sampling design in the computation of our estimates. We investigate whether the endorsement patterns for different normative citizenry indicators are comparable between countries. In particular, we assess whether a comparable model fits the data reasonably well, in contrast with other alternative models. To this end, we use a typological latent class analysis (Hagenaars and McCutcheon 2002; Lazarsfeld and Henry 1968).

A latent class analysis is pertinent for this task as it identifies unobserved groups of respondents, with each group characterized by a specific pattern of response probabilities. Previous findings have found diverse configurations of endorsement of citizenship norms (Hooghe et al. 2016; Reichert 2017), and latent groups that resemble Dalton’s distinction (Dalton 2008) between duty-based and engaged citizenship. Based on the work of Hooghe and colleagues (Hooghe and Oser 2015; Oser and Hooghe 2013) in modeling citizenship norms, and the literature of latent class models for multiple groups (Eid et al. 2003; Finch 2015; Kankaraš and Vermunt 2015; Masyn 2017) we discuss the trade-offs between model fit and the possibility of invariant interpretations based on the analysis of a latent class structure by contrasting solutions under structural homogeneity across countries versus a partially homogeneous solution.

2 Conceptual Background

2.1 Endorsement of Citizenship Norms by Different Citizens

Citizenship norms express what is required from citizens in a given nation. According to previous research, the behavior expected from citizens to be considered “good citizens” includes a varied set of duties, such as obeying the law, voting in elections, and helping others (Mcbeth et al. 2010). However, the pattern of adherence to these sets of norms varies in form, and different citizenship profiles have been developed to fit this pluralism of civic norms. Several authors have studied how adherence to citizenship norms depends on the object of the norm (Dalton 2008), the participation required (Westheimer and Kahne 2004a, b), and the core norms expressed by each profile (Denters et al. 2007). Dalton (2008) divides norms between those that express allegiance to the state, such as obeying the law, and those that express allegiance to the proximal group, such as the support of others, leading to the distinction between duty-based citizenship and engaged citizenship. Westheimer and Kahne (2004a, b), on the other hand, differentiate between levels of involvement, including those who carry out their duties (personally responsible), those who organize actions in the community (participatory citizens), and those who critically assess society (justice-oriented citizens). Denters et al. (2007) differentiate among citizenship models based on core norms: a traditional elitist model (law abiding), a liberal model (deliberation), and a communitarian model (solidarity). In general, it is difficult to model citizenship norms adherence as a single unidimensional construct, as the participant responses often display response patterns that cannot be limited to a single distribution.

To account for the complexity of adherence to citizenship norms, other authors have relied on latent class analysis (Hooghe and Oser 2015; Hooghe et al. 2016; Reichert 2016a, b, 2017). This approach, unlike principal component analysis and factor analysis used in previous research (e.g., Dalton 2008; Denters et al. 2007), allows us to distinguish a set of unobserved groups from a set of observed measures (Masyn 2013). As such, instead of distinguishing dimensions that describe the proclivity of participants to give a higher category response, it identifies the most likely patterns of responses by participants. In this regard, participants are classified as high or low in more than one dimension simultaneously, thus expressing a typology of norms endorsement. With this approach, Hooghe and colleagues (Hooghe and Oser 2015; Hooghe et al. 2016) have consistently identified five patterns: all-around, duty-based, engaged, mainstream, and subject, using data from IEA’s 1999 Civic Education Study (CIVED) and 2009 ICCS, including more than 21 countries. Reichert (2017) found four similar groups, excluding the mainstream group, using data from Australian youth (ages 19–24 years). These later approaches echo the distinction identified by Dalton (2008) between duty-based and engaged citizenship, while also identifying other configurations of citizenship norms endorsement.

2.2 The Present Study

We followed the approach of Hooghe and colleagues (Hooghe and Oser 2015; Hooghe et al. 2016), and fit a series of latent class models to students’ answers on the citizenship norm survey included in ICCS 2016. These items resemble injunctive norms (Cialdini et al. 1991). Each item represents something considered desirable, sanctioned, or expected. In other words, the items describe what people ought to do in contrast to descriptive norms (what people tend to do) (Cialdini and Goldstein 2004). As such, students’ responses do not imply engaging in each action. However, social norms predict the likelihood of students to vote, participate in protests, and obey the law (Gerber and Rogers 2009; Köbis et al. 2015; Rees and Bamberg 2014; Wenzel 2005). Thus, from a normative perspective, identifying how students adhere to different citizenship norms is relevant to understand how endorsement of different norms is configured within the student population, and how these profiles of adherence vary in different contexts.

Unlike previous research, which has relied on partially homogenous model specifications (Hooghe and Oser 2015; Hooghe et al. 2016) to compare citizenship norms adherence between countries, we use a structurally homogenous model. Partially homogenous models include interactions between indicators and country membership, thus allowing item intercepts to vary freely between countries. This model specification is akin to a differential item functioning model (Masyn 2017), where the pattern of response probabilities are allowed to vary across countries, and in that way making them inconsistent with a unified interpretation of the latent group across all countries. As such, partially homogenous models consist of multigroup descriptive models, where only the structure of the latent model is preserved between the compared countries (Kankaraš and Vermunt 2015), while the response pattern of each latent class is not preserved. In contrast, structurally homogenous models imply the same response pattern for each latent class, and only the rates of the latent classes may vary between countries. This allows us to interpret a specific latent class based on the same response pattern in different countries.

3 Method

Data Sources. We used students’ responses from IEA’s ICCS 2016 (Schulz et al. 2018a). This study obtained responses from a representative sample of grade 8 students (average 14 years), using a two-stage probabilistic design with schools as the primary sampling unit, selecting a classroom of students in each school (Schulz et al. 2018b). In 2016, 24 countries participated in the study from Europe (Belgium (Flemish), Bulgaria, Croatia, Denmark, Estonia, Finland, Latvia, Lithuania, Malta, the Netherlands, Norway, Russian Federation, Slovenia, Sweden, North Rhine-Westphalia (Germany)), Latin America (Chile, Colombia, the Dominican Republic, Mexico, and Peru), and Asia (Chinese Taipei, Hong Kong, and Korea).

Variables. The indicators selected in the analysis are those used by Hooghe and colleagues in previous studies (Hooghe and Oser 2015; Hooghe et al. 2016). In ICCS 2016, students had to evaluate the importance of different behaviours in terms of being a good adult citizen, using a four-point Likert-type scale, with the response options of “very important,” “quite important,” “not very important,” and “not important at all.” These indicators, presented in Question 23, are listed in Table 1.

Although the original survey format included the four previously described options, in this study we worked with a binary recoding of the response data. We re-categorized responses as either important (including “very important” and “quite important”) or not important (including “not very important” and “not important at all”). This recoding scheme presents two advantages. It guarantees comparability with previous research on the same items (Hooghe and Oser 2015; Hooghe et al. 2016), and also diminishes cells sparseness (Eid et al. 2003), when there are many indicators and groups.

3.1 Analysis

Our analysis focused on the use of a (structurally) homogeneous model to analyze profiles of good citizenship across countries in order to prioritize the interpretability of international comparisons (Kankaraš and Vermunt 2015). A homogeneous model assumes that it is possible to identify a set of qualitatively distinct classes in the population being studied, each with a characteristic response pattern, which is stable across all countries (i.e., the probability of agreeing to each item within each class remains invariant across countries), while allowing the proportion of people that belong to each class to be country specific (i.e., the probability of observing each class can vary from country to country). In other words, while the model assumes that each class has the same response pattern in each country—thus ensuring comparable interpretations across them—the prevalence of each class can vary from one country to another.

In order to identify the most appropriate model, we relied on a two-step strategy, with a first stage of exploratory analysis focused on the identification of the number of classes, and a second validation stage focused on the replication of the results using the selected model. Accordingly, the full dataset was divided into two randomly selected groups within the primary sampling unit (i.e., the schools).

The selection of the number of latent classes was conducted in the exploratory stage by examining the empirical results from models that considered between 1 and 10 latent classes. However, the interpretation of the results was not solely empirical, as it was also informed by the existing results in the literature by Hooghe and colleagues (Hooghe and Oser 2015; Hooghe et al. 2016).

In accordance with previous studies, we use multiple criteria to determine the final number of classes, including the meaningful interpretation of patterns, as well as statistical indices, such as Akaike information criterion (AIC) and Bayesian information criterion (BIC), the percentage change in the likelihood ratio chi-square statistic, and the level of classification error.

Once the number of classes was selected, we examined the stability of the solution by replicating the same analysis on the validation sample, and, based on these results, we proceeded to examine the response patterns within each class and their similarity to the classes previously reported in the literature (Hooghe and Oser 2015; Hooghe et al. 2016).

We then contrasted the selected solution under the homogeneous measurement model with the more flexible solution offered by a partially homogenous model specification in order to illustrate the costs in terms of interpretability that are associated to the adoption of a model that allows the variation of the patterns of response probabilities within each country and conclude illustrating the characteristics of the classification of individuals across countries.

All estimates were produced using Latent Gold 4.5 software (Vermunt and Magidson 2013), including scaled survey weights (up to a 1000), so each country contributed equally to the estimates (Gonzalez 2012). For standard error estimation, we use Taylor Series Linearization specifying schools as primary sampling unit, and jackzones as their pseudo strata (Asparouhov and Muthén 2010; Stapleton 2013).

4 Results

4.1 Model Selection

We conducted analysis for models considering from 1 latent class to 10 latent classes. We inspected the summary of fit statistics for all these models (see Table 2), to select the most appropriate and interpretable model. The information criteria pointed to models with a larger number of classes than the theoretical expectation of a five-class solution, with the BIC pointing towards a nine-class solution, and the AIC pointing towards the solution with 10 classes. However, when examining the percentage change in the L2 values (likelihood ratio chi-square statistic), it is possible to see that the fit improvement is marginal, varying only around 6% (0.52–0.46) between the models with six and ten classes, while at the same time increasing by about 5% the classification error rate (0.27–0.22). As a result, we focused more closely on the solutions between four and six classes, where we could still observe a larger reduction in the percentage change in the likelihood ratio chi-square statistic, while at the same time maintaining a comparable rate of classification errors (between 0.18 and 0.22 for the four-class and six-class solutions, respectively).

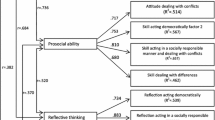

Among these three models, the four and five-class solutions presented classes with markedly different patterns of response probabilities, while the additional pattern added in the six-class model differs mostly in terms of a single indicator related to the history of the country. We inspected the response profile of the five-class model to compare it to previous studies (Fig. 1). The response profile expresses the expected response on each item for each latent class. The expected response were indeed consistent, though not identical, with the classes previously described by Hooghe and colleagues (Hooghe and Oser 2015; Hooghe et al. 2016). In light of these trade-offs, and considering both the theoretical and statistical criteria, we decided to adopt the five-class solution as the basis for the remaining analysis.

4.2 Stability of the Five-Class Solution

In order to confirm the stability of the chosen solution, we fit the five-class model using the validation sample and produced its profile plot (Fig. 2). The results from the exploratory and validation samples are very similar. Their expected probability of responses, presented in the profile plots (Figs. 1 and 2), display an average difference of 0.02 and maximum difference of 0.06. The difference in prevalence of each class between these two samples is also very low, ranging between 0.01 and 0.02 in the expected latent classes. These later results largely resemble the results obtained by Hooghe and Oser (2015) using data from ICCS 2009 (see Table 3).

4.3 Response Patterns

To interpret the latent class solution, we interpret the expected probability of response to each item, conditional to the latent class, which are summarized in the profile plot presented in Fig. 1. We paid special attention to two features of the obtained results; the typical response to indicators from a class, and responses that express class separation, that is response patterns that distinguish groups of respondents (Masyn 2013). To assign names to the generated classes by the selected model we used two criteria. The first criterion is that the latent class names should describe, and not contradict, a feature of the expected response pattern, or its class rate. Thus, if a latent class is named “majority,” then its rates should be higher than the rates of the rest of the latent classes. The second criterion is to choose names from previous literature, as long as they do not fit the first criteria. This later criterion is used to aid theory development, in order to formulate expectations regarding the relations of these different latent classes for further research.

The first pattern presents a consistently higher probability of answering “important” to all the items, thus expressing that all civic norms are important to this class of students. This class matches the “all-around” class reported by Hooghe and Oser (2015). In this study, we have labeled this class as a “comprehensive” understanding of citizenship, as it exhibits probabilities above 0.78 for all items, with the exception of joining a political party. It is worth noting that this item is consistently the least likely to be considered important across all the different classes. “Comprehensive” seems a better term because this class of students valued different forms of civic engagement, including manifest forms of participation such as voting, extra parliamentary actions, peaceful protest, and social involvement, for example by helping in the local community (Ekman and Amnå 2012).

The most contrasting pattern is the class with lowest probability of answering “important” across all items. This pattern matches the “subject” class presented by Hooghe and Oser (2015). We have labeled this group as “anomic,” because it expresses the lowest endorsement of all citizenship norms included, in comparison to the rest of the classes. It comes from the idea of anomie, from the Latin “lack of norms” or normless (Schlueter et al. 2007), “a condition in which society provides little moral guidance to individuals” (Macionis 2018, p. 132). This class of students seems to represent this definition in the most descriptive sense, as a loss of internalized social norms (Srole 1956). Approximately, less than a fifth of the students of this class considered all the citizenship norms included in this study to be important, with the exception of two items: obeying the law and working hard. About four out of ten students from this group consider hard work and obeying the law as desirable attributes for a good citizen. However, this rate of endorsement is too low to be considered typical of this group, as >0.7 or <0.3 of probability of response are more sensible thresholds for typifying a class (Masyn 2013).

The remaining three response patterns lie between these two extremes. The least variable of these three patterns, consistently presents probabilities between 0.52 and 0.71 for all items except the indicator associated with political discussions and joining a political party (with probabilities of 0.38 and 0.33, respectively). This pattern is similar to the “mainstream” class reported by Hooghe and Oser (2015). We have disregarded the mainstream term because this concept may suggest that this class is the largest group between all classes. However, this response pattern accounts for 14–15% of students, thus remaining outside of the most typical class within the full typology. Instead, we have labelled this group as “monitorial” (Hooghe and Dejaeghere 2007), because its response pattern is a mix of valuing non-conventional forms of political participation, while disregarding engaging in political parties (0.33). However, it expresses some political interest by valuing participation in elections (0.67), and values non-institutionalized forms of political participation (Amnå and Ekman 2014), such as peaceful protests against unjust laws (0.58), participating in activities to benefit the local community (0.68), promoting human rights (0.71), and protecting the environment (0.68).

The remaining patterns are characterized for greater variability in the probability of considering certain aspects as important features of citizenship. The fourth pattern shows very high probabilities of considering as important those elements related to the protection of the environment, the protection of human rights, participation in activities that benefit the local community, as well as highly valuing obedience to the law and respect for government representatives (all with probabilities between 0.85 and 0.95). These high probabilities contrast with a lower probability of considering as important participation in political discussions and joining a political party (0.19 and 0.09, respectively). This pattern is similar to the “engaged” class reported by Hooghe and Oser (2015). We have labeled this pattern “socially engaged” instead, in order to emphasize its profile of valuing aspects that involve others.

The fifth and final pattern is the most variable among all the patterns, with high probability of considering as important the items associated with obeying the law, working hard, respecting government authorities (0.95, 0.86, and 0.82, respectively), and voting in every election (0.75). Simultaneously, this class presents lower probabilities of considering important items related to participation in non-institutionalized forms of political participation. This includes activities to protect the environment, activities to benefit people in the local community, and activities to protect human rights (0.50, 0.40, and 0.35, respectively). Likewise, this class presents a very low probability of considering as important items associated with participating in peaceful protests, political discussion, and joining a political party (0.19, 0.19, and 0.13, respectively). This pattern is consistent with the “duty-based” class reported by Hooghe and Oser (2015), and we have decided to maintain the same name in this study.

4.4 A Homogeneous Versus Country Specific Model

Even though the five recovered classes are consistent with the results presented by Hooghe and colleagues (Hooghe and Oser 2015; Hooghe et al. 2016), there is a significant difference between the models used in these previous studies and those used in the present chapter. Hooghe and colleagues adopted a “partially homogeneous model” (Kankaraš et al. 2011; Kankaraš and Vermunt 2015), which effectively allows for country specific variations in the patterns of response probabilities. This model is conceptually akin to allowing for differential item functioning for all items in all countries (Masyn 2017). Although such an approach offers a better statistical fit than the structurally homogeneous models, this comes at the price of a considerably more complex model (156 versus 432 parameters) without a significant improvement in the classification error rates, with a non-substantial difference of 0.006 of classification error difference between these two approaches (Table 4).

Moreover, the main cost of adopting a country-specific model is the interpretation of the response profile across countries. In this model specification, the structure of the expected response is so flexible that it allows the response pattern for each class to diverge significantly from the most likely pattern across countries. We illustrate this variability by producing a profile plot for a country-specific model (Fig. 3). In this profile plot, we overlaid the average pattern across the countries, in contrast to the expected response pattern for one class in each country.

In the country specific model, the expected responses for the monitorial class (or “mainstream” in the terminology of Hooghe) vary significantly. In contrast, in the homogeneous model the expected responses for each class show a single pattern of response across countries. It is clear that while the class labels rely on the overall pattern, the country specific probabilities can diverge significantly from this trend; this variation complicates comparisons across countries that are nominally part of the same class.

4.5 Latent Class Realizations

In order to classify cases into the expected latent classes, we fit the chosen model of five latent classes over the 12 citizenship norm indicator responses of ICCS 2016. To this end, we specified the chosen model in MPLUS v8.4 (Muthén and Muthén 2017) and in Latent Gold 4.5 (Vermunt and Magidson 2013). As before, we included scaled survey weights (up to 1000) for each country and used Taylor Series Linearization to estimate standard errors. Fitting the same model in two different software programs presents certain advantages. It provides evidence of the stability of the results regardless of how algorithms from different software are implemented. It also provides different outputs useful for further use to implement different modes of inferences based on the generated results (Nylund-Gibson and Choi 2018; Nylund-Gibson et al. 2019).

The latent class realizations from both software programs are substantially equivalent. We estimate the prevalence at the population level of the latent class realizations, including the study survey design. The results produce a single difference of 0.01 for the anomic class. The prevalence of the rest of the latent groups produces the same results regardless of the software used to generate the realizations (Table 5). We also inspected the response profile generated by the results of both software programs and found them to be substantially equivalent, displaying a mean difference of 0.01 and a maximum difference of 0.03 (results not shown).

Fit indexes between the two software programs vary, due to how a structurally homogeneous model is fitted in each program. Latent Gold uses a logistic parametrization and includes all the countries as a nominal variable, as essentially different dummy codes conditioning the latent variable of five classes (see Kankaraš et al. 2011). In contrast, Mplus includes groups as known classes, which conditions the latent variable of five classes (e.g., Geiser et al. 2006). As a consequence, Latent Gold has 156 degrees of freedom for this model, while MPLUS uses 179 degrees of freedom, because it requires 23 more parameters, or one for each country “mean” of these known classes, while leaving one country out for reference.

The present book uses Mplus as the software of preference in most of the chapters, so we keep the latent class realizations generated by this later software for further analysis.

4.6 Classification Across Countries

We have discussed the overall results associated with the five-class model using a structurally homogeneous approach, however, it is also important to evaluate the quality of the classification of individual respondents based on this model. Ideally, the model should be able to classify each respondent, with high probability, in one of the classes in the model, and at the very least the assigned class should have a probability above 0.5, as otherwise it is more likely that the respondent belongs “outside” the selected class. In order to examine how the five classes model is classifying individual respondents for each of the countries, we produced boxplots representing the distributions of the modal classification probabilities for respondents in each of the 24 countries (Fig. 4).

Notes BFL = Belgium (Flemish), BGR = Bulgaria, CHL = Chile, COL = Colombia, DNK = Denmark, DNW = North Rhine-Westphalia, DOM = Dominican Republic, EST = Estonia, FIN = Finland, HKG = Hong Kong, HRV = Croatia, ITA = Italy, KOR = Korea, LTU = Lithuania, LVA = Latvia, MEX = Mexico, MLT = Malta, NLD = The Netherlands, NOR = Norway, PER = Peru, RUS = Russian Federation, SVN = Slovenia, SWE = Sweden, TWN = Chinese Taipei

Distribution of modal classification probabilities for the different countries.

Although there is variability across the distributions of the 24 countries, the median classification probability of all countries was above 0.73. On average, countries have 7% of their respondents classified with a probability of less than 0.5, varying from a minimum of just 2% in the case of Korea to the only six countries or regions with 9% or more: Sweden (9%), Malta (9%), Lithuania (9%), Latvia (11%), the Netherlands (11%), and North Rhine-Westphalia (12%). Overall, these model classification levels are sufficiently high to support inference at the country level.

5 Conclusions

Overall, these results support the use of latent class analysis as a modeling alternative that captures the complexity and variability in patterns of response to these items in different countries. The differences between the latent classes, which exhibit unordered, qualitatively distinct response patterns, indicate that these variations are unlikely to be well described by a single unidimensional structure.

We have made the case that it is valuable to analyze and interpret these five patterns in a consistent manner across countries through the use of a homogeneous model, even though better fitting alternatives are available if country specific variation is allowed. The improvement in fit from so-called “partially homogeneous” models allows for the country specific response probability patterns to significantly vary from the overall average pattern that is being interpreted as representative of a given class. We contend that in the face of a trade-off between the meaningful comparison between countries and the improvement of statistical fit, it is worth accepting the shortcomings of a more parsimonious, more constrained, latent class model in order to justify a consistent interpretation of the classes across countries.

Regarding the specific patterns of response probabilities, it is worth noting that the two most prevalent classes are the comprehensive and socially-engaged profiles of good citizenship. These two patterns ascribe the highest levels of importance overall to all the elements considered in the survey, with the notable exception of the low importance that students in the socially-engaged profile give to engaging in political discussions and joining a political party. The high prevalence of participants that tend to assign importance to most of the practices considered in the survey should be considered jointly with the fact that the only class that presents a pattern with consistently low importance given to all these practices, the anomic profile, is not only the smallest class relative to the others, but also a very small class in the absolute sense.

Although our current study is not able to fully evaluate Dalton’s predictions regarding the increment of alternative ways of participation and civic engagement at the expense of the reduction in numbers of people who avoid participation, the results of this study are consistent with this prediction: there are fewer students with duty-based profiles than socially-engaged profiles.

In this regard, we believe particular attention should be paid to the high proportion of participants classified as being in the socially-engaged profile, as this pattern has not been widely studied in the literature. The pattern of highly valuing the promotion of human rights, the protection of the environment, and engagement with local issues and activities is significant, while at the same time a very low perceived importance regarding engagement in traditional political parties is consistent with the original diagnosis proposed by Dalton (2008), and points to a significant proportion of youth and adolescents searching for non-traditional, sometimes grass-roots approaches to dealing with social and global challenges.

References

Amnå, E., & Ekman, J. (2014). Standby citizens: Diverse faces of political passivity. European Political Science Review, 6(2). https://doi.org/10.1017/S175577391300009X.

Asparouhov, T., & Muthén, B. (2010). Resampling methods in Mplus for complex survey data. Mplus Technical Report. http://www.statmodel.com/download/Resampling_Methods5.pdf.

Byrne, B. M., & Watkins, D. (2003). The issue of measurement invariance revisited. Journal of Cross-Cultural Psychology, 34(2), 155–175. https://doi.org/10.1177/0022022102250225.

Cialdini, R. B., & Goldstein, N. J. (2004). Social influence: Compliance and conformity. Annual Review of Psychology, 55(1), 591–621. https://doi.org/10.1146/annurev.psych.55.090902.142015.

Cialdini, R. B., Kallgren, C. A., & Reno, R. R. (1991). A focus theory of normative conduct: A theoretical refinement and reevaluation of the role of norms in human behavior. Advances in Experimental Social Psychology, 24(C), 201–234. https://doi.org/10.1016/S0065-2601(08)60330-5.

Dalton, R. J. (2008). Citizenship norms and the expansion of political participation. Political Studies, 56(1), 76–98. https://doi.org/10.1111/j.1467-9248.2007.00718.x.

Denters, S. A. H., Gabriel, O., & Torcal, M. (2007). Norms of good citizenship. In J. W. van Deth, J. R. Montero, & A. Westholm (Eds.), Citizenship and involvement in European democracies: A comparative analysis (pp. 87–108). https://doi.org/10.4324/9780203965757-12.

Eid, M., Langeheine, R., & Diener, E. (2003). Comparing typological structures across cultures by multigroup latent class analysis: A primer. Journal of Cross-Cultural Psychology, 34(2), 195–210. https://doi.org/10.1177/0022022102250427.

Ekman, J., & Amnå, E. (2012). Political participation and civic engagement: Towards a new typology. Human Affairs, 22(3), 283–300. https://doi.org/10.2478/s13374-012-0024-1.

Finch, H. (2015). A comparison of statistics for assessing model invariance in latent class analysis. Open Journal of Statistics, 05(03), 191–210. https://doi.org/10.4236/ojs.2015.53022.

Geiser, C., Lehmann, W., & Eid, M. (2006). Separating “rotators” from “Nonrotators” in the mental rotations test: A multigroup latent class analysis. Multivariate Behavioral Research, 41(3), 261–293. https://doi.org/10.1207/s15327906mbr4103_2.

Gerber, A. S., & Rogers, T. (2009). Descriptive social norms and motivation to vote: Everybody’s voting and so should you. Journal of Politics, 71(1), 178–191. https://doi.org/10.1017/S0022381608090117.

Gonzalez, E. J. (2012). Rescaling sampling weights and selecting mini-samples from large-scale assessment databases. IERI Monograph Series Issues and Methodologies in Large-Scale Assessments, 5, 115–134.

Hagenaars, J. A., & McCutcheon, A. (Eds.). (2002). Applied latent class analysis. New York, NY: Cambridge University Press.

Hooghe, M., & Dejaeghere, Y. (2007). Does the “monitorial citizen” exist? An empirical investigation into the occurrence of postmodern forms of citizenship in the nordic countries. Scandinavian Political Studies, 30(2), 249–271. https://doi.org/10.1111/j.1467-9477.2007.00180.x.

Hooghe, M., & Oser, J. (2015). The rise of engaged citizenship: The evolution of citizenship norms among adolescents in 21 countries between 1999 and 2009. International Journal of Comparative Sociology, 56(1), 29–52. https://doi.org/10.1177/0020715215578488.

Hooghe, M., Oser, J., & Marien, S. (2016). A comparative analysis of ‘good citizenship’: A latent class analysis of adolescents’ citizenship norms in 38 countries. International Political Science Review, 37(1), 115–129. https://doi.org/10.1177/0192512114541562.

Kankaraš, M., Moors, G., & Vermunt, J. K. (2011). Testing for measurement invariance with latent class analysis. In E. Davidov, P. Schmidt, & J. Billiet (Eds.), Crosscultural analysis Methods and applications (pp. 359–384). https://doi.org/10.4324/9781315537078.

Kankaraš, M., & Vermunt, J. K. (2015). Simultaneous latent-class analysis across groups. Encyclopedia of Quality of Life and Well-Being Research, 1974, 5969–5974. https://doi.org/10.1007/978-94-007-0753-5_2711.

Köbis, N. C., Van Prooijen, J. W., Righetti, F., & Van Lange, P. A. M. (2015). “Who doesn’t?”−The impact of descriptive norms on corruption. PLOS ONE, 10(6), 1–14. https://doi.org/10.1371/journal.pone.0131830.

Köhler, H., Weber, S., Brese, F., Schulz, W., & Carstens, R. (Eds.). (2018). ICCS 2016 user guide for the international database. Amsterdam, the Netherlands: International Association for the Evaluation of Educational Achievement (IEA).

Lazarsfeld, P., & Henry, N. (1968). Latent structure analysis. New York, NY: Houghton-Mifflin.

Macionis, J. J. (2018). Sociology (16th ed.). Harlow, England: Pearson Education Limited.

Masyn, K. E. (2013). Latent class analysis and finite mixture modeling. In T. D. Little (Ed.), The Oxford handbook of quantitative methods (1st ed., Vol. 2, pp. 551–611). https://doi.org/10.1093/oxfordhb/9780199934898.013.0025.

Masyn, K. E. (2017). Measurement invariance and differential item functioning in latent class analysis with stepwise multiple indicator multiple cause modeling. Structural Equation Modeling: A Multidisciplinary Journal, 24(2), 180–197. https://doi.org/10.1080/10705511.2016.1254049.

Mcbeth, M. K., Lybecker, D. L., & Garner, K. A. (2010). The story of good citizenship: Framing public policy in the context of duty-based versus engaged citizenship. Politics and Policy, 38(1), 1–23. https://doi.org/10.1111/j.1747-1346.2009.00226.x.

Muthén, L. K., & Muthén, B. (2017). Mplus user’s guide (8th ed.). Los Angeles, CA: Muthén & Muthén.

Nagengast, B., & Marsh, H. W. (2013). Motivation and engagement in science around the globe: Testing measurement invariance with multigroup structural equation models across 57 countries using. In L. Rutkowski, M. von Davier, & D. Rutkowski (Eds.), Handbook of international large-scale assessment: Background, technical issues, and methods of data analysis (pp. 317–344). Boca Raton, FL: Chapman and Hall/CRC.

Nylund-Gibson, K., & Choi, A. Y. (2018). Ten frequently asked questions about latent class analysis. Translational Issues in Psychological Science, 4(4), 440–461. https://doi.org/10.1037/tps0000176.

Nylund-Gibson, K., Grimm, R. P., & Masyn, K. E. (2019). Prediction from latent classes: A demonstration of different approaches to include distal outcomes in mixture models. Structural Equation Modeling, 00(00), 1–19. https://doi.org/10.1080/10705511.2019.1590146.

Oser, J., & Hooghe, M. (2013). The evolution of citizenship norms among Scandinavian adolescents, 1999–2009. Scandinavian Political Studies, 36(4), 320–346. https://doi.org/10.1111/1467-9477.12009.

Rees, J. H., & Bamberg, S. (2014). Climate protection needs societal change: Determinants of intention to participate in collective climate action. European Journal of Social Psychology, 44(5), 466–473. https://doi.org/10.1002/ejsp.2032.

Reichert, F. (2016a). Students’ perceptions of good citizenship: a person-centred approach. Social Psychology of Education, 19(3), 661–693. https://doi.org/10.1007/s11218-016-9342-1.

Reichert, F. (2016b). Who is the engaged citizen? Correlates of secondary school students’ concepts of good citizenship. Educational Research and Evaluation, 22(5–6), 305–332. https://doi.org/10.1080/13803611.2016.1245148.

Reichert, F. (2017). Young adults’ conceptions of ‘good’ citizenship behaviours: A latent class analysis. Journal of Civil Society, 13(1), 90–110. https://doi.org/10.1080/17448689.2016.1270959.

Schlueter, E., Davidov, E., & Schmidt, P. (2007). Applying autoregressive cross-lagged and latent growth curve models to a three-wave panel study. In K. van Montfort, J. Oud, & A. Satorra (Eds.), Longitudinal models in the behavioral and related sciences (2nd ed., pp. 315–336). New Jersey: Lawrence Erlbaum Associates Inc.

Schulz, W., Ainley, J., Fraillon, J., Losito, B., Agrusti, G., & Friedman, T. (2018a). ICCS 2016 international report: Becoming citizens in a changing world. Cham, Switzerland: Springer.

Schulz, W., Carstens, R., Losito, B., & Fraillon, J. (Eds.). (2018b). ICCS 2016 technical report. Amsterdam, the Netherlands: International Association for the Evaluation of Educational Achievement (IEA).

Srole, L. (1956). Social integration and certain corollaries: An exploratory study. American Sociological Review, 21(6), 709. https://doi.org/10.2307/2088422.

Stapleton, L. M. (2013). Incorporating sampling weights into single- and multilevel analyses. In L. Rutkowski, M. von Davier, & D. Rutkowski (Eds.), Handbook of international large scale assessment: Background, technical issues, and methods of data analysis (pp. 363–388). Boca Raton, FL: Chapman and Hall/CRC.

Vermunt, J. K., & Magidson, J. (2013). LG-Syntax user’s guide: Manual for latent GOLD 5.0 syntax module. Belmont, MA: Statistical Innovations Inc.

Wenzel, M. (2005). Misperceptions of social norms about tax compliance: From theory to intervention. Journal of Economic Psychology, 26(6), 862–883. https://doi.org/10.1016/j.joep.2005.02.002.

Westheimer, J., & Kahne, J. (2004a). Educating the “good” citizen: Political choices and pedagogical goals. Political Science and Politics, 37(02), 241–247. https://doi.org/10.1017/S1049096504004160.

Westheimer, J., & Kahne, J. (2004b). What kind of citizen? The politics of educating for democracy. American Educational Research Journal, 41(2), 237–269. https://doi.org/10.3102/00028312041002237.

Acknowledgements

The authors would like to thank the Chilean National Agency of Research and Development for the complementary support of this research through the grants ANID/FONDECYT N° 11180792, and ANID/FONDECYT N° 1180667.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution-NonCommercial 4.0 International License (http://creativecommons.org/licenses/by-nc/4.0/), which permits any noncommercial use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2021 International Association for the Evaluation of Educational Achievement (IEA)

About this chapter

Cite this chapter

Torres Irribarra, D., Carrasco, D. (2021). Profiles of Good Citizenship. In: Treviño, E., Carrasco, D., Claes, E., Kennedy, K.J. (eds) Good Citizenship for the Next Generation . IEA Research for Education, vol 12. Springer, Cham. https://doi.org/10.1007/978-3-030-75746-5_3

Download citation

DOI: https://doi.org/10.1007/978-3-030-75746-5_3

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-75745-8

Online ISBN: 978-3-030-75746-5

eBook Packages: EducationEducation (R0)